Department of Communication and Media researchers win 2024 Purdue Best Paper Award

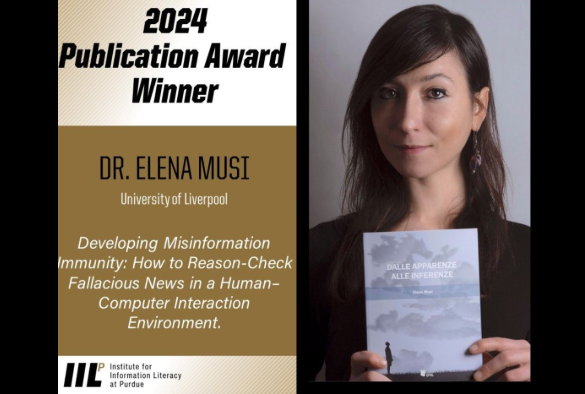

Academics from the University of Liverpool’s Department of Communication and Media have been awarded the 2024 Purdue Best Paper Award for their innovative research into fake news.

The paper, presented by lead author Dr Elena Musi, detailed how the researchers have developed two open-access chatbots: the fake news immunity chatbot and the vaccinating news chatbot. These aim to ensure both citizens and journalists have the tools to challenge misinformation.

Using Fallacy Theory and Human-Computer Interaction, the team developed the chatbots to give citizens the means to act as fact checkers, and to give communication gatekeepers (such as journalists) the means to avoid creating and spreading misleading news.

The chatbots, presented in the form of a game in which avatars from ancient Greece ask questions, build critical thinking skills and improve media literacy. Videos showing how the chatbots work are also available.

Entitled "Developing Misinformation Immunity: How to Reason-Check Fallacious News in a Human–Computer Interaction Environment," the paper is a key output of the University of Liverpool's UKRI-funded research project "Being Alone Together: Developing Fake News Immunity". This project aimed to provide citizens with the means to act as fact checkers.

The award, from the Institute for Information Literacy at Purdue University in the United States, recognises excellence in information literacy scholarship that leads to significant developments and innovation in information literacy models addressing information challenges.

The research, by a team comprising Dr Elena Musi, Professor Simeon Yates, Professor Kay O’Halloran, Dr Elinor Carmi and Professor Chris Reed, was selected from a competitive field of top information literacy papers from around the world.

Dr Elena Musi said: “In this paper we propose the first framework to assess the effectiveness of pre-bunking digital tools in bolstering critical thinking skills within the contemporary misinformation landscape. Our findings indicate that teaching how to recognize misleading arguments within an interactive inoculation setting leads to heightened resilience against misinformation.”

The team is currently engaged in a separate EMIF-funded research project titled 'Leveraging Argument Technology for Impartial Fact-Checking', which aims to mitigate the cognitive biases inherent in verifying information online. The project is due to be completed later this year.